Your Cortex Contains 17 Billion Computers

Neural networks of neural networks

Brains receive input from the outside world, their neurons do something to that input, and create an output. That output may be a thought (I want curry for dinner); it may be an action (make curry); it may be a change in mood (yay curry!). Whatever the output, that “something” is a transformation of some form of input (a menu) to output (“chicken dansak, please”). And if we think of a brain as a device that transforms inputs to outputs then, inexorably, the computer becomes our analogy of choice.

For some this analogy is merely a useful rhetorical device; for others it is a serious idea. But the brain isn’t a computer. Each neuron is a computer. Your cortex contains 17 billion computers.

OK, what? Look at this:

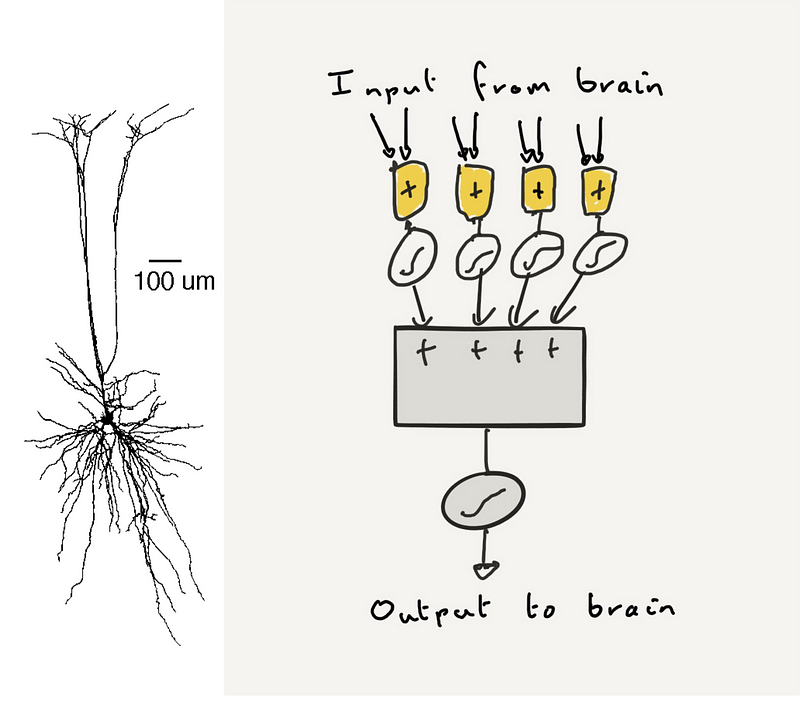

This is a picture of a pyramidal cell, the neuron that makes up most of your cortex. The blob in the centre is the neuron’s body; the wires stretching and branching above and below are the dendrites, the twisting cables that gather the inputs from other neurons near and far. Those inputs fall all across the dendrites, some right up close to the body, some far out on the tips. Where they fall matters.

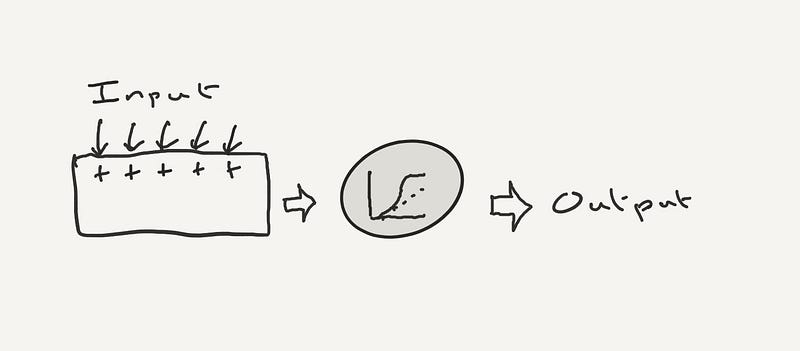

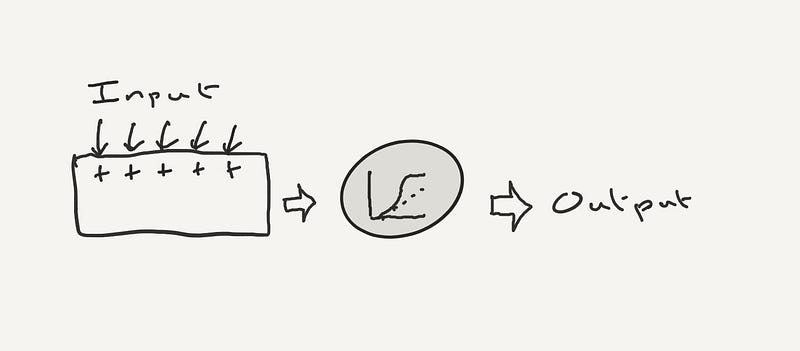

But you wouldn’t think it. When talking about how neurons work, we usually end up with the sum-up-inputs-and-spit-out-spike idea. In this idea, the dendrites are just a device to collect inputs. Activating each input alone makes a small change to the neuron’s voltage. Sum up enough of these small changes, from all across the dendrites, and the neuron will spit out a spike from its body, down its axon, to go be an input to other neurons.

It’s a handy mental model for thinking about neurons. It forms the basis for all artificial neural networks. It’s wrong.

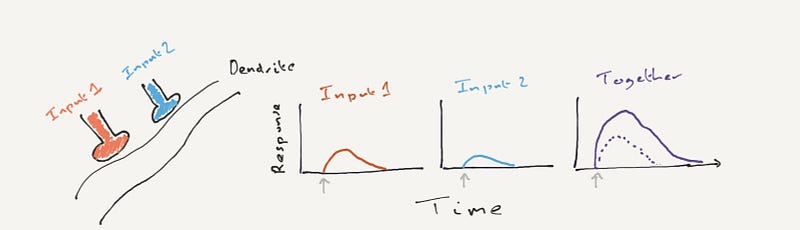

Those dendrites are not just bits of wire: they also have their own apparatus for making spikes. If enough inputs are activated in the same small bit of dendrite then the sum of those simultaneous inputs will be bigger than the sum of each input acting alone:

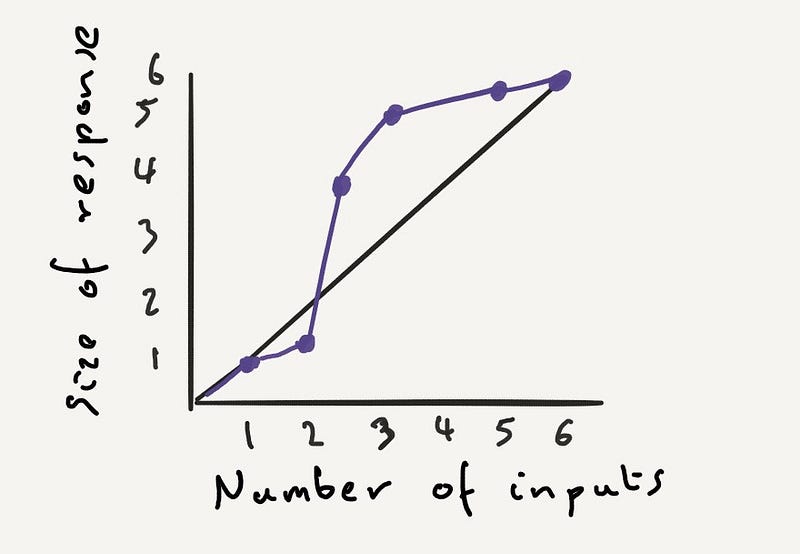

The relationship between the number of active inputs and the size of the response in a little bit of dendrite looks like this:

There’s the local spike: the sudden jump from almost no response to a few inputs, to a big response with just one more input. A bit of dendrite is “supralinear”: within a dendrite, 2+2=6.
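To make the shape of that curve concrete, here is a toy sketch in Python of one small bit of dendrite. It assumes a hard threshold: below `SPIKE_THRESHOLD` coincident inputs the branch sums linearly, and at or above it a local spike adds a fixed extra `SPIKE_AMPLITUDE`. Both numbers are illustrative choices picked to reproduce the 2+2=6 arithmetic, not measurements; real dendritic spikes are graded and depend on timing and location.

```python
# Toy model of one small stretch of dendrite. The numbers are illustrative
# assumptions chosen to reproduce "2 + 2 = 6", not measured values.
UNITARY = 1.0          # response to one active input (arbitrary units)
SPIKE_THRESHOLD = 4    # coincident inputs needed to trigger a local spike
SPIKE_AMPLITUDE = 2.0  # extra response added by the local spike


def branch_response(n_active: int) -> float:
    """Response of one bit of dendrite to n_active simultaneous inputs."""
    linear_sum = n_active * UNITARY
    if n_active >= SPIKE_THRESHOLD:
        # Local dendritic spike: the response jumps above the linear sum.
        return linear_sum + SPIKE_AMPLITUDE
    return linear_sum


for n in range(7):
    print(n, branch_response(n))

# Two inputs alone give 2, another two alone give 2, all four together give 6.
print(branch_response(2) + branch_response(2), "vs", branch_response(4))
```

The printout climbs by 1.0 per input up to three inputs, then jumps from 3.0 to 6.0 when the fourth input arrives: the local spike.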

We’ve known about these local spikes in bits of dendrite for many years. We’ve seen these local spikes in neurons within slices of brain. We’ve seen them in the brains of anaesthetised animals having their paws tickled (yes, unconscious brains still feel stuff; they just don’t bother to tell anyone). We’ve very recently seen them in the dendrites of neurons in animals that were moving about (yeah, Moore and friends recorded the activity of a bit of dendrite just a few micrometres across, in the brain of a mouse going about its business; crazy, huh?). A pyramidal neuron’s dendrites can make “spikes”.

So they exist: but why does this local spike change the way we think about the brain as a computer? Because the dendrites of a pyramidal neuron contain many separate branches. And each can sum-up-and-spit-out-a-spike. Which means that each branch of a dendrite acts like a little nonlinear output device, summing up and outputting a local spike if that branch gets enough inputs at roughly the same time:

Wait. Wasn’t that our model of a neuron? Yes it was. Now if we replace each little branch of dendrite with one of our little “neuron” devices, then a pyramidal neuron looks something like this:

Yes, each pyramidal neuron is a two-layer neural network. All by itself.

Beautiful work by Poirazi and Mel back in 2003 showed this explicitly. They built a complex computer model of a single neuron, simulating each little bit of dendrite, the local spikes within them, and how they sweep down to the body. They then directly compared the output of this model neuron to the output of a two-layer neural network, and the two were essentially the same.

The extraordinary implication of these local spikes is that each neuron is a computer. By itself the neuron can compute a huge range of so-called nonlinear functions. Functions that a neuron which just sums-up-and-spits-out-a-spike cannot ever compute. For example, with four inputs (Blue, Sea, Yellow, and Sun) and two branches acting as little non-linear devices, we can set up a pyramidal neuron to compute the “feature-binding” function: we can ask it to respond to Blue and Sea together, or respond to Yellow and Sun together, but not to respond otherwise — not even to Blue and Sun together or Yellow and Sea together. Of course, neurons receive many more than four inputs, and have many more than two branches: so the range of logical functions they could compute is astronomical.
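The feature-binding example can be checked directly. The Python sketch below treats the neuron as a two-layer network: each branch is a threshold unit that makes a local spike only when both of its inputs are active, and the soma fires if any branch spikes. The wiring (Blue and Sea on one branch, Yellow and Sun on the other) and the threshold of two inputs per branch are assumptions chosen for illustration. For contrast, it also searches a grid of small integer weights for a single sum-up-and-spit-out-a-spike unit giving the same four answers.

```python
from itertools import product

INPUTS = ("Blue", "Sea", "Yellow", "Sun")
# Hypothetical wiring: Blue and Sea synapse on branch 0, Yellow and Sun on branch 1.
BRANCHES = (("Blue", "Sea"), ("Yellow", "Sun"))
BRANCH_THRESHOLD = 2  # assumed: a branch spikes only if both its inputs are active


def branch_spikes(active, branch):
    """Layer 1: a branch makes a local spike if enough of its own inputs are active."""
    return sum(name in active for name in branch) >= BRANCH_THRESHOLD


def pyramidal_neuron(active):
    """Layer 2: the soma fires if at least one branch made a local spike."""
    return int(any(branch_spikes(active, b) for b in BRANCHES))


def point_neuron(active, weights, threshold):
    """Sum-up-and-spit-out-a-spike: one weighted sum compared with one threshold."""
    return int(sum(weights[name] for name in active) >= threshold)


# The four cases from the text and the desired response to each.
TARGET = {
    ("Blue", "Sea"): 1,
    ("Yellow", "Sun"): 1,
    ("Blue", "Sun"): 0,
    ("Yellow", "Sea"): 0,
}

for pair, want in TARGET.items():
    print(pair, "->", pyramidal_neuron(set(pair)), "(wanted", want, ")")

# Search small integer weights and thresholds for a point neuron that matches.
found = any(
    all(point_neuron(set(p), dict(zip(INPUTS, w)), theta) == want
        for p, want in TARGET.items())
    for w in product(range(-3, 4), repeat=4)
    for theta in range(-6, 7)
)
print("point neuron found:", found)
```

The search comes up empty, and not because the grid is small. Adding the two "fire" conditions, w_Blue + w_Sea ≥ θ and w_Yellow + w_Sun ≥ θ, says the four weights total at least 2θ; adding the two "stay silent" conditions says the same total is below 2θ. No choice of weights satisfies both.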

More recently, Romain Caze and friends (I am one of those friends) have shown that a single neuron can compute an amazing range of functions even if it cannot make a local, dendritic spike. Because dendrites are naturally not linear: in their normal state they actually sum up inputs to a total less than the sum of the individual values. They are sub-linear. For them 2+2 = 3.5. And having many dendritic branches with sub-linear summation also lets the neuron act as a two-layer neural network. A two-layer neural network that can compute a different set of non-linear functions to those computed by neurons with supra-linear dendrites. And pretty much every neuron in the brain has dendrites. So almost all neurons could, in principle, be a two-layer neural network.
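Sub-linear summation can pull off a similar trick. This Python sketch assumes each input adds 1.0 and that a passive branch saturates at 1.5, so two inputs on the same branch give 1.5 rather than 2 (a cruder version of 2+2 = 3.5). With inputs a and b on one branch, c and d on the other, and a soma threshold of 2.0, the neuron fires only when at least one input on each branch is active. The wiring, the cap and the threshold are hypothetical, and this particular function is picked for illustration rather than taken from Caze's work.

```python
from itertools import product

# Illustrative assumptions: each input adds 1.0 and a branch saturates at 1.5,
# so summation within a branch is sub-linear (1 + 1 = 1.5, not 2).
BRANCH_CAP = 1.5
SOMA_THRESHOLD = 2.0
# Hypothetical wiring: inputs a and b share branch 0; c and d share branch 1.
BRANCHES = (("a", "b"), ("c", "d"))


def sublinear_branch(n_active: int) -> float:
    """Passive, saturating branch: linear for one input, sub-linear beyond that."""
    return min(1.0 * n_active, BRANCH_CAP)


def neuron(active: set) -> int:
    """The soma sums the (sub-linear) branch outputs and fires at threshold."""
    total = sum(sublinear_branch(sum(x in active for x in br)) for br in BRANCHES)
    return int(total >= SOMA_THRESHOLD)


# Check every input pattern against "(a or b) and (c or d)".
for bits in product((0, 1), repeat=4):
    active = {name for name, on in zip("abcd", bits) if on}
    expected = int(bool(active & {"a", "b"}) and bool(active & {"c", "d"}))
    assert neuron(active) == expected
print("neuron computes (a or b) and (c or d)")
```

As with feature binding, no single sum-up-and-spit-out-a-spike unit can do this: firing for a+c and b+d but not for a+b or c+d runs into the same contradiction between the summed weights.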

The other amazing implication of the local spike is that neurons know a hell of a lot more about the world than they tell us — or other neurons, for that matter.

Not long ago, I asked a simple question: How does the brain compartmentalise information? When we look at the wiring between neurons in the brain, we can trace a path from any neuron to any other. How then does information apparently available in one part of the brain (say, the smell of curry) not appear in all other parts of the brain (like the visual cortex)?

There are two opposing answers to that. The first is that, in some cases, the brain is not compartmentalised: information does pop up in weird places, like sound in brain regions dealing with place. But the other answer is that the brain is compartmentalised, and it is dendrites that do it.

As we just saw, the local spike is a non-linear event: it is bigger than the sum of its inputs. And the neuron’s body basically can’t detect anything that is not a local spike. Which means that it ignores most of its individual inputs: the bit which spits out the spike to the rest of the brain is isolated from much of the information the neuron receives. The neuron only responds when a lot of the inputs are active together in time and in space (on the same bit of dendrite).
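A minimal sketch of that isolation, taking at face value the idea that the body registers only local spikes: four inputs clustered on one branch make the neuron fire, while the same four inputs scattered one per branch reach more dendrite but leave the body silent. The threshold of four coincident inputs per branch is an illustrative assumption.

```python
# Illustrative assumptions: a branch makes a local spike when at least
# LOCAL_SPIKE_THRESHOLD of its inputs are active together, and the cell body
# registers only local spikes (sub-threshold branch activity is ignored).
LOCAL_SPIKE_THRESHOLD = 4


def body_fires(active_per_branch):
    """active_per_branch[i] = number of simultaneously active inputs on branch i."""
    return int(any(n >= LOCAL_SPIKE_THRESHOLD for n in active_per_branch))


clustered = [4, 0, 0, 0]  # four inputs on the same branch
scattered = [1, 1, 1, 1]  # the same four inputs, one per branch

for name, pattern in (("clustered", clustered), ("scattered", scattered)):
    branches_active = sum(n > 0 for n in pattern)
    print(f"{name}: {branches_active} branch(es) receiving input, "
          f"body fires = {body_fires(pattern)}")
```

In the scattered case every branch is receiving input, yet nothing of it reaches the rest of the brain.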

If this was true, then we should see that dendrites respond to things that the neuron does not respond to. We see exactly this. In visual cortex, we know that many neurons respond only to things in the world moving at a certain angle (like most, but by no means all of us, they have a preferred orientation). Some neurons fire their spikes to things at 60 degrees; some at 90 degrees; some at 120 degrees. But when we record what their dendrites respond to, we see responses to every angle. The dendrites know a hell of a lot more about how objects in the world are arranged than the neuron’s body does.

They also look at a hell of a lot more of the world. Neurons in visual cortex only respond to things in a particular position in the world — one neuron may respond to things in the top left of your vision; another to things in the bottom right. Very recently Sonia Hofer and her team showed that while the spikes from neurons only happen in response to objects appearing in one particular position, their dendrites respond to many different positions in the world, often far from the neuron’s apparent preferred position. So the neurons respond only to a small fraction of the information they receive, with the rest tucked away in their dendrites.

Why does all this matter? It means that each neuron could radically change its function by changes to just a few of its inputs. A few get weaker, and suddenly a whole branch of dendrite goes silent: the neuron that was previously happy to see cats, for that branch liked cats, no longer responds when your cat walks over your bloody keyboard as you are working, and you are a much calmer, more together person as a result. A few inputs get stronger, and suddenly a whole branch starts responding: a neuron that previously did not care for the taste of olives now responds joyously to a mouthful of ripe green olive (in my experience, this neuron only comes online in your early twenties). If all inputs were summed together, then changing a neuron’s function would mean having the new inputs laboriously fight each and every other input for attention; but have each bit of dendrite act independently, and new computations become a doddle.
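The same toy logic shows how a handful of synapses can flip a neuron's function. This Python sketch assumes a branch spikes when the summed weight of its active synapses reaches 1.0 and that each stimulus drives only its own branch. Weakening two of the four synapses on a hypothetical "cat" branch silences it; strengthening two on a hypothetical "olive" branch brings it online. The weights are invented for illustration.

```python
# Illustrative assumption: a branch makes a local spike (and so the neuron
# fires) when the summed weight of its active synapses reaches 1.0.
BRANCH_THRESHOLD = 1.0

# Hypothetical synaptic weights on two branches of one neuron.
weights = {
    "cat": [0.3, 0.3, 0.3, 0.3],
    "olive": [0.2, 0.2, 0.2, 0.2],
}


def responds_to(stimulus: str, w: dict) -> bool:
    """The stimulus activates every synapse on its own branch and nothing else;
    the neuron fires if that branch reaches its local-spike threshold."""
    return sum(w[stimulus]) >= BRANCH_THRESHOLD


print("before:", responds_to("cat", weights), responds_to("olive", weights))

# Plasticity at just two synapses per branch.
weights["cat"][0] = weights["cat"][1] = 0.15    # weaken two cat inputs
weights["olive"][0] = weights["olive"][1] = 0.4  # strengthen two olive inputs

print("after:", responds_to("cat", weights), responds_to("olive", weights))
```

Before the change the neuron responds to cats but not olives; afterwards it is the other way round, and neither change needed to compete with the inputs on the other branch.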

It means the brain can do many more computations than it could if each neuron were just a machine for summing up inputs and spitting out a spike. Yet that’s the basis for all the units that make up an artificial neural network. It suggests that deep learning and its AI brethren have but glimpsed the computational power of an actual brain.

Your cortex contains 17 billion neurons. To understand what they do, we often make analogies with computers. Some use these analogies as cornerstones of their arguments. Some consider them to be deeply misguided. Our analogies often look to artificial neural networks: for neural networks compute, and they are made up of neuron-like things; and so, therefore, should brains compute. But if we think the brain is a computer because it is like a neural network, then we must now admit that individual neurons are computers too. All 17 billion of them in your cortex; perhaps all 86 billion in your brain.

And so it means your cortex is not a neural network. Your cortex is a neural network of neural networks.

With thanks to Romain Caze for suggestions
